Oracle SQL / PL/SQL

PL/SQL is a procedural language designed specifically to embrace SQL statements within its syntax. PL/SQL program units are compiled by the Oracle Database server and stored inside the database. At run time, both PL/SQL and SQL run within the same server process, which keeps execution efficient. PL/SQL also inherits the robustness, security, and portability of Oracle Database.

PL/SQL is a powerful yet straightforward database programming language. It is easy to read and write, and it comes with built-in optimizations and security features.

Building and Managing PL/SQL Program Units

- Building with Blocks: PL/SQL is a block-structured language. A block consists of up to three sections: declaration, executable, and exception handling. Only the executable section is required. Familiarity with blocks is critical to writing code that stays manageable and scales.

- Controlling the Flow of Execution: PL/SQL provides conditional branching and iterative processing, so a program can follow complex execution paths rather than running statements strictly in sequence.

- Wrap Your Code in a Neat Package: A package is a schema object that groups logically related variables, constants, exceptions, and subprograms. Packages are the fundamental building blocks of any high-quality PL/SQL-based application.

- Picking Your Packages: The concepts and benefits of PL/SQL packages, which encapsulate your program logic so that it stays readable and manageable over time.

- Error Management: An exploration of the error management features in PL/SQL.

- The Data Dictionary: Make Views Work for You: Use several critical data dictionary views to analyze and manage your code.
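
To make the block structure, flow control, and error management topics above more concrete, here is a minimal sketch of an anonymous PL/SQL block. The variable names (`v_total`, `c_limit`) and the deliberate division by zero are illustrative assumptions, not code from any particular application.

```sql
DECLARE
  -- Declaration section: variables and constants local to this block
  -- (v_total and c_limit are hypothetical names chosen for illustration)
  v_total  NUMBER := 0;
  c_limit  CONSTANT PLS_INTEGER := 5;
BEGIN
  -- Executable section: iterative processing with a FOR loop
  FOR i IN 1 .. c_limit LOOP
    -- Conditional branching: accumulate only the even values
    IF MOD(i, 2) = 0 THEN
      v_total := v_total + i;
    END IF;
  END LOOP;

  DBMS_OUTPUT.PUT_LINE('Sum of even values: ' || v_total);

  -- Deliberately trigger a run-time error to reach the exception section
  v_total := v_total / 0;
EXCEPTION
  -- Exception-handling section: handle a predefined exception by name
  WHEN ZERO_DIVIDE THEN
    DBMS_OUTPUT.PUT_LINE('Caught division by zero');
  -- Log anything unexpected, then re-raise so the caller still sees it
  WHEN OTHERS THEN
    DBMS_OUTPUT.PUT_LINE('Unexpected error: ' || SQLERRM);
    RAISE;
END;
/
```

To see the `DBMS_OUTPUT` messages in SQL*Plus or SQL Developer, run `SET SERVEROUTPUT ON` first. In a real application, logic like this would more likely live in a procedure or function inside a package than in an anonymous block.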

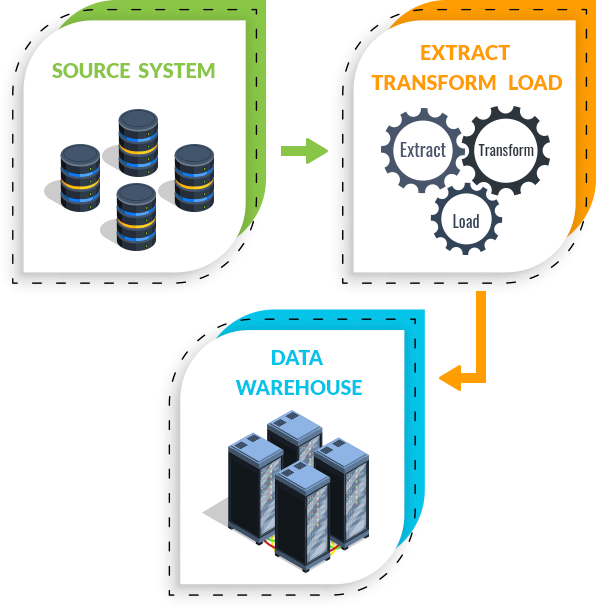

We understand that very few businesses rely on a single data type or system, and that the need for complex and intelligent data management strategies varies from one organization to the next. When planning an ETL (extract, transform, load) solution, our team considers the variety of connectors you’ll need, their portability and ease of use, and whether you’ll need open-source tools for flexibility.

SQL Server Integration Services (SSIS)

Integration Services is a platform for building enterprise-level data integration and data transformation solutions. Use Integration Services to solve complex business problems by copying or downloading files, loading data warehouses, cleansing and mining data, and managing SQL Server objects and data.

Integration Services can extract and transform data from a wide variety of sources such as XML data files, flat files, and relational data sources, and then load the data into one or more destinations.

Integration Services includes a rich set of built-in tasks and transformations, graphical tools for building packages, and the Integration Services Catalog database, where you store, run, and manage packages.

You can use the graphical Integration Services tools to create solutions without writing a single line of code. You can also program the extensive Integration Services object model to create packages programmatically and code custom tasks and other package objects.